| previous | 2025, week 01 (Monday 30 December 2024) | next |

There is no technical newsletter this week.


19/12/2024-25/12/2024

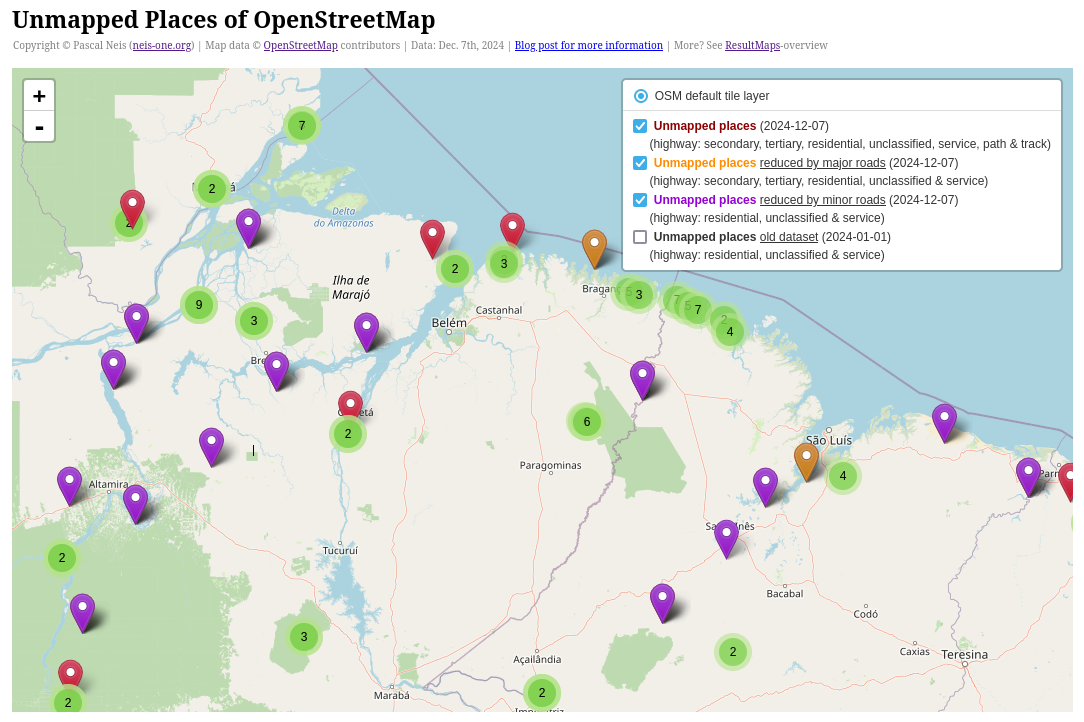

“Unmapped Places of OpenStreetMap” [1] | © Pascal Neis | Map data © OpenStreetMap contributors

Note:

If you would like to see your event here, please add it to the OSM calendar. Only data that is there will appear in weeklyOSM.

This weeklyOSM was produced by MatthiasMatthias, Raquel Dezidério Souto, Strubbl, TheSwavu, YoViajo, barefootstache, derFred, mcliquid.

We welcome link suggestions for the next issue via this form and look forward to your contributions.

The remote terrain, limited public transport, expensive private travel and unpredictable climatic conditions make travelling to the Northeast a challenge. Yet these same factors have helped preserve the region’s natural habitats and diversity, making it a biodiversity hotspot and a dream destination for visitors. So when the two of us learnt of the opportunity to explore Dehing Patkai National Park (DPNP) under the Wiki Loves Butterfly (WLB) project, run by our very own “Wiki Butterfly lady” Ananya Mondal, we grabbed it.

The Beginning: Embracing the Challenge

Dehing Patkai National Park (232 sq km) is part of the Dehing Patkai landscape (575 sq km), the largest and only remaining stretch of lowland rainforest in India. These rainforests lie in the foothills of the Himalayas known as the “Patkai”, and the Dehing River (one of the largest tributaries of the Brahmaputra) flows along these foothills, which gives the area its name: Dehing Patkai.

Two to three months before the trip, we began our groundwork to get to know the place better. We spoke to friends, searched the internet, made more friends online and gathered every bit of information we could from them. We prepared our butterfly checklist and wishlist, found a local guide, booked our tickets, paid the token advance, and waited for the day our journey would begin.

Day 1: From Guwahati to Rani

Finally, the day arrived. On 25 September 2024 we took early-morning flights to Guwahati, where our adventure together began. First on the list was Rani, a local butterfly hotspot very close to the airport. We spent a couple of hours there and were lucky to capture quite a few butterflies, including some lifers for both of us.

Train Journey to Tinsukhiya: A Wet Welcome

Happy and content with the day, we packed up and took the evening train to Tinsukhiya. This is where Dehing Patkai National Park is, and where we were going to spend the next five days. Lashing rain welcomed us at the railway station.

Arrival at Naharkatiya: Meeting Our Guide

Our plan to take public transport from the station to our homestay in Naharkatiya had to be put on hold. While killing time at the station, we heard announcements about the timing of a local train. We quickly went to the enquiry counter to check whether any train was going to Naharkatiya, and there it was. We confirmed with our guide and boarded it at around 7 am. The local train took an hour and a half to reach Naharkatiya, by which time the rain had eased to a drizzle. Debojit dada picked us up, and 15 minutes later we reached his home, our homestay.

Dehing Patkai: The Jungle Adventure Begins

After a quick freshen-up and breakfast, we were ready for the day’s adventures. First, we hitched a ride on a sand truck to get to Dehing Patkai. Standing on top of the truck, we spotted the entrance gate from a distance, and it looked so welcoming. One step inside the gate, looking up at the towering Hollong trees (the state tree of Assam), we knew exactly why these lowland rainforests are called the “Amazon of the East”.

A Birdwing and a Red Helen sent the two of us off in different directions. The butterflies flew away, and we both followed the guide along our trail for the day. We had barely walked five minutes when the pleasantly cool air turned wet and it started pouring heavily. We spent the rest of the day hoping the rain would stop at any moment.

Then came another happy realization of why this is a rainforest: once it starts raining, there is no stopping it. We could still sense the richness of the habitat, as between showers we spotted foresters, bushbrowns, redeyes, archdukes, Jungle Glory and more. Still, it wasn’t the right day to explore the habitat fully.

Day 2: Sunshine and Butterfly Bonanza

Day two brought sunny weather and the best butterfly sightings. As on the previous day, we hitched a ride on a truck, an experience straight out of an amusement park. Riding on the back of the truck, we watched the jungle move past, with birds and butterflies flying around and branches swinging close to our heads, making us duck just in time. Filmy songs started pouring out of both of us, and we had the best time balancing ourselves while giggling and laughing out loud.

The day was full of butterfly activity. We barely moved around, because one spot alone gave us so many butterflies and so many good photo opportunities. We were especially happy with the crows: unless you get the upperside, they are difficult to identify, and here they were, inviting us to photograph both the upperside and the underside. The chocolate royals gave us a chocolaty treat too. There were so many of them that a few decided to hitchhike on our shoulders, heads, cameras and more.

The Rain Gods: Dehing Patkai’s Eternal Downpour

For the following three days, the rain gods again kept reminding us that we were in Dehing Patkai, the lowland rainforest. Bowing to them and taking the rain on our heads, faces and shoulders, we managed to capture a few more butterflies from our wishlist. Overall, in five days, we documented over 135 butterfly species from Jeypore Rain Forest, Saraipong Range and Jokai (a reserve forest that is not part of DPNP).

The Encounters: Connections Beyond Butterflies

In between butterflying, we were lucky to meet Satyendra Dutta at his residence one evening. During our preliminary research on the area, his butterfly posts had kept our adrenaline and resolve high while we planned this trip. We not only received a warm welcome at his home but also an entire tour of the house and its butterfly host and nectar plants. He shared stories behind some exclusive captures and showed us a lot of interesting photographs taken over the past couple of years exploring Dehing and nearby areas. While we got talking like long-lost friends, Ms Dutta whipped up a quick, delicious dinner for us.

One day on our way back, we also had the opportunity to meet our guide Debojit dada’s family in Digboi. His parents have a small garden outside their home. Here again we ran around with our cameras and found a lifer, a Bush Hopper, resting quietly on their sugarcane plant. The elderly couple was quite amused to see two girls from a faraway land visiting Dehing Patkai for butterflies. They spoke about things we could not fully understand because of the language barrier, but from their smiles and gestures we gathered how fortunate we were on this trip. A small insect, the butterfly, helps us explore and experience the rich and varied diversity our country has to offer.

A Dream Realized: The Future of Butterfly Conservation

Based on our short visit, we believe that year-round monitoring in these areas would yield valuable photo documentation, especially of early stages, which are hardly documented or studied. Our photographs from the trip can be found on Wikimedia under the tag “WLB Dehing Patkai National Park”.

The trip was made possible by guidance, support and funding from Wiki Loves Butterfly, a digital conservation and science-based field documentation project that aims to improve Wikimedia’s coverage of butterflies in the northeastern states of India. Our heartfelt thanks to Ananya Mondal for trusting us and encouraging us at every step to turn this dream into reality.

News and updates for administrators from the past month (December 2024).


Co-authored by Ian Ramjohn

As the world grows more urbanized, urban and suburban areas provide a barrier to wildlife reliant on increasingly fragmented natural habitats while also providing new opportunities for species that can tolerate close association with humans. This fall, Brooklyn College students in Tony Wilson’s Principles of Ecology course brought their classroom content to Wikipedia to improve how the encyclopedia covers both of these interconnected topics.

“While the internet is a rich source of information, primary scientific sources are typically very dense and written in jargon that is difficult for the public to understand,” explained Wilson. “At the same time, many of the webpages, blogs and tweets that the public see on the internet are provided without attribution, creating potential confusion and discord. Wikipedia has built a unique brand associated with ‘knowledge integrity’, with an integrated network of writers and editors working to ensure that the information provided is truthful and provided without bias.”

At its best, Wilson explained, Wikipedia offers an invaluable service in providing accessible, user-friendly and accurate information in ecology, environmental science, and a range of other fields.

Working as individuals and in groups, Wilson’s 21 students contributed nearly 31,000 words and more than 200 references to Wikipedia, helping raise public awareness of correlated topics such as wildlife species and their habitats, climate change, and ecological conservation.

One trio of student editors focused their efforts on the urban evolution article, completely transforming nearly every section to outline the complex effects of factors like urban pollution, urban habitat fragmentation, and resource availability on urban evolution.

For some species, urban environments offer a space to thrive with few predators and a ready source of food. For others, urban environments are habitats rich in prey species, with few other competitors. But urban environments are always challenging places to live, with higher temperatures (due to the urban heat island effect), higher levels of air, water and noise pollution, and greater oxidative stress. These factors create an environment where species are subject to very intense selective pressures, driving evolution in species populations that inhabit urban environments.

As the three student editors expanded the article, they added detailed explanations of these selective pressures and highlighted examples of how species have evolved in response to them. Readers can now learn about how white-footed mice’s teeth have changed to handle the available food sources in New York City, and how raccoons have demonstrated increased behavioral flexibility and learning abilities by adapting to their urban environments. The student editors also discussed non-adaptive genetic changes in urban populations (like genetic drift) as a result of isolation and habitat fragmentation.

While some urban wildlife can use buildings, small parks, and backyards as habitat, other species depend on larger and more specialized areas. Coney Island Creek in Brooklyn is an important patch of habitat for many wildlife species that depend on wetlands. It also represents a heavily impacted and polluted area, illustrating how even wildlife habitat in cities can differ sharply from non-urban habitat.

This urban waterway is the product of centuries of human manipulation of a coastal wetland, coupled with recent efforts to restore parts of it. Three classmates, including biology major Arianna Arregui, added 4,400 words and 41 references to the Coney Island Creek Wikipedia article to enhance the coverage of its wildlife habitat, along with several of the aquatic and terrestrial species which depend on the area. Arregui’s group also added valuable information about the impacts of restoration projects, the limits of these efforts, and the many needs that still remain.

“Coney Island Creek holds significant historical and ecological value that often goes unnoticed,” explained Arregui. “With proper advocacy, meaningful changes can be made to support its restoration and inspire future ecological projects. As a native New Yorker, I’ve often felt that connecting with nature in an urban setting can be challenging, which is why Coney Island Creek resonated with my interests in urban ecology and environmental protection.”

While urban habitat provides opportunities for some species, for most species it represents a loss of habitat and a barrier that fragments them into smaller populations with higher risks of extinction. Rapid development in Florida resulted in the loss of wildlife habitat, and fragmented what was left into smaller patches that are capable of supporting fewer species. Species in habitat fragments are more prone to extinction, and if they go extinct locally, it’s very difficult for others to recolonize these now-vacant patches of suitable habitat. The role of the Florida Wildlife Corridor – whose Wikipedia article was transformed by another group of students in Wilson’s course – is to improve the connection between Florida’s state parks, national forests, and wildlife management areas.

By expanding information about the bipartisan legislative efforts that created these corridors and the conservation benefits they provide, the students gave readers a more complete understanding of their history and ecological role. The student editors also highlighted the challenges the network faces and the ways in which such networks can hurt wildlife by allowing invasive species to spread between protected areas.

Thanks to the work of Wilson’s students this semester, Wikipedia now gives readers a more nuanced understanding of the way wildlife interacts with expanding urbanization – and provides critical insights into what the future may look like in a warmer and increasingly urbanized world.

Wiki Education thanks the Horne Family Foundation for their support of this work to improve Wikipedia content related to species habitat, wildlife populations, and the impact of climate change.

Interested in incorporating a Wikipedia assignment into your course? Visit teach.wikiedu.org to learn more about the free resources, digital tools, and staff support that Wiki Education offers to postsecondary instructors in the United States and Canada.

Hello everyone, and welcome to the 26th issue of the Wikipedia Scripts++ Newsletter, covering all our favorite new and updated user scripts since 1 August 2024. At press time, over 94% of the world has legally fallen prey to the merry celebrations of "Christmas", and so shall you soon. It's been a quiet four months, and we hope to see you with way more new scripts next year. Happy holidays! Aaron Liu

Got anything good? Tell us about your new, improved, old, or messed-up script here!


__EXPECTUNUSEDTEMPLATE__ magic word to exclude a template from Special:UnusedTemplates! I wonder if Wikipedia has a templaters' newsletter...

We need scripts that...

| Rank | Article | Class | Views | Image | Notes/about |
|---|---|---|---|---|---|
| 1 | Pushpa 2: The Rule | | 2,707,690 | | The much-awaited sequel to the 2021 Tollywood film Pushpa: The Rise was finally released last week after three years of waiting. It stars Allu Arjun in the titular role, paired with Rashmika Mandanna. The film recovered its ₹500 crore (US$59 million) budget within just three days, becoming the first Indian film to gross such a sum in so short a time. |
| 2 | Kash Patel | | 2,038,926 | | Formerly a federal prosecutor at the U.S. Department of Justice, he was selected by President-elect Donald Trump to be Director of the Federal Bureau of Investigation during Trump's second presidency. |
| 3 | Wicked (2024 film) | | 1,215,749 | | A well-received, star-studded adaptation of the stage musical (#7), offering a revisionist take on the Land of Oz where the Wicked Witch of the West is just a victim of prejudice and propaganda who decided to embrace the bad image painted upon her. Along with making lots of money (mostly in North America, where it passed the $300 million mark), Wicked is expected to become an awards contender. |
| 4 | Syrian civil war | | 1,090,108 | | A war that started when I was eight months old still continues to this day. Syria's civil war can be described as hell on earth, with 620,000 deaths (half of them civilians) and millions of displaced folks. The reason it ranks this high is the recent rebel offensive (#9) that wiped out Ba'athist Syria, and Bashar al-Assad's rule with it, in less than 11 days. |
| 5 | Martial law | | 1,036,797 | | Replacing civilian government with military rule and suspending civilian legal processes in favor of military powers. The only reason it's here now is that, in what has been described as a "self-coup", "political suicide", and "stupid", South Korean president Yoon Suk Yeol declared it with no advance warning on December 3. Within two hours, 190 legislators forced their way into the National Assembly Proceeding Hall (including Lee Jae-myung, who livestreamed himself) and unanimously voted to repeal it. |
| 6 | Deaths in 2024 | | 1,010,937 | | Quoting one of the biggest hits of this year, by Bruno Mars and Lady Gaga: "If the world was ending, I'd wanna be next to you / If the party was over and our time on earth was through / I'd wanna hold you just for a while / And die with a smile..." |
| 7 | Wicked (musical) | | 994,074 | | The book adaptation that made a killing on Broadway before getting the film treatment (#3). The original Elphaba (Idina Menzel) and Glinda (Kristin Chenoweth) have cameos in the movie. Many countries that staged their own versions of Wicked brought in the women who played the witches to dub their film counterparts (such as Mexicans Danna Paola and Ceci de la Cueva in the Latin American Spanish version). |
| 8 | UnitedHealth Group | | 939,448 | | This American health insurance provider, currently 8th on the Fortune Global 500, places higher than its CEO (#10) following his killing in NYC this week. The company's alleged greed drew contempt on social media. Some went so far as to view Thompson's death as justified payback for the thousands of deaths each year they blame on the company denying health care coverage. |
| 9 | 2024 Syrian opposition offensives | | 937,915[1] | | The Syrian opposition hadn't launched a military offensive campaign since 2020, but with the help of allied Turkish-backed groups, it finally decided to go all-in against the forces of Bashar al-Assad. After taking cities such as Aleppo, Homs and Palmyra, the rebels ended the week covered by this report by moving into the capital, Damascus. By the early hours of Sunday, Assad had fled to Russia, ending a totalitarian hereditary dictatorship that had ruled Syria ever since Assad's father took over in a 1970 coup. |
| 10 | Brian Thompson (businessman) | | 925,938 | | The CEO of #8 left his Manhattan hotel at 6:45 on the morning of December 4 and was walking across the street to attend an investors' meeting. He never made it to the door: he was shot several times from behind and died thirty minutes later. He had previously received death threats, and his out-of-town assailant had been staying in a NYC hostel for ten days. |

| Rank | Article | Class | Views | Image | Notes/about |
|---|---|---|---|---|---|
| 1 | Bashar al-Assad | | 4,026,589 | | Syria's controversial ruler for over twenty years, Assad saw his government overthrown in #4. He has fled to Russia, a country ruled by another twenty-year ruler, where he was given political asylum. What he plans to do is unknown. One thing is for sure: he isn't returning to Syria (#3) anytime soon. |
| 2 | Pushpa 2: The Rule | | 4,023,277 | | A sequel that comes three years after the first film, with a third film already on the way. Released a week ago, Pushpa 2 has already collected ₹1,106 crore (US$130 million). It has emerged as the second highest-grossing Indian film of the year, the fourth highest-grossing film in India and the eighth highest-grossing Indian film (#5). |
| 3 | Syria | | 2,563,423 | | A civil war that has been going on for thirteen years is only the most recent chapter of Syria's decades-long sociopolitical chaos. The conflict has regained international attention after the opposition took over the capital, Damascus, and ousted #1. The war is far from over: with neighboring countries launching invasions, heavy fighting continues, and the country's future is uncertain. |
| 4 | Syrian civil war | | 1,822,405 | | |
| 5 | List of highest-grossing Indian films | | 1,270,262 | | Indian cinema definitely plays an important part in the international film industry. With #2 reaching new heights, it's no wonder this list made it onto ours. Of the eight highest-grossing films, four came from Tollywood and three from Bollywood. Although Kollywood has actors with international fandoms, it couldn't place a film higher than 15th. |
| 6 | Gukesh Dommaraju | | 1,200,900 | | The world of chess welcomes its newest World Champion. Having played chess since the age of seven and won several championships throughout his career, Gukesh is only 18 years old, a remarkable age for a world champion. He defeated Ding Liren, who is nearly double his age, in a close World Chess Championship battle, winning by a single point. Checkmate. (Well, not really. Ding resigned before Gukesh could do that.) |
| 7 | Asma al-Assad | | 1,079,427 | | #1's wife, she has also fled to Russia along with her three children. Although she was born in the UK and retains British citizenship, the UK government has said that she isn't welcome and is considering sanctions. |
| 8 | Killing of Brian Thompson | | 1,057,985 | | Brian Thompson, a low-profile businessman and CEO of UnitedHealthcare, was shot dead in Midtown Manhattan, allegedly by Luigi Mangione. Mangione's exact motive is unclear: some say it was revenge for his family members' denied claims, while others say it was political. The killing itself was controversial, with some claiming it was justified and others offering condolences to Thompson's family. Whatever the case, the incident was one of the most famous killings of 2024, and Thompson has been added to #10. |
| 9 | Brian Thompson (businessman) | | 1,026,961 | | |
| 10 | Deaths in 2024 | | 1,000,904 | | "Now the Sun's gone to hell / And the Moon's riding high / Let me bid you farewell / Every man has to die..." |

For the October 11 – November 11 period, per this database report.

| Title | Revisions | Notes |
|---|---|---|
| Killing of Brian Thompson | 2263 | As mentioned above, the CEO of health company UnitedHealthcare was shot dead in Manhattan. Motives are unclear, but the alleged murderer, Luigi Mangione, has shown admiration for the Unabomber Manifesto. |
| Deaths in 2024 | 2029 | Along with Thompson, high-profile departures of the period included presenter Chuck Woolery, drummer Bob Bryar and director Jim Abrahams. |
| 2024 South Korean martial law | 1872 | South Korean president and PPP member Yoon Suk Yeol is not popular: he has had consistently low approval ratings, partly because he stopped multiple corruption investigations into his wife, Kim Keon-hee. He has also struggled to get much done, since the National Assembly is controlled by the opposition Democratic Party (DP). Declaring martial law seems to have been a last-ditch attempt by Yoon to keep power. Considering he had told almost no one beforehand, it was also his decision alone. It was the first declaration of martial law since the coup d'état of May Seventeenth, and for older Koreans it recalled the military dictatorship that South Korea had been under. Koreans immediately took to the streets to call for Yoon's impeachment and arrest. Legislators did try to impeach him, but the attempt failed because Yoon's party refused to cooperate. Still, Yoon is now banned from leaving South Korea. |
| Wicked (2024 film) | 1419 | In 1995, Gregory Maguire wrote a revisionist take on the Wicked Witch of the West, reimagining the Oz villain as Elphaba, a person targeted by prejudice and propaganda. Stephen Schwartz read it on vacation and made a Broadway musical out of it, whose runaway success led to a film adaptation. Wicked has been very well received, earning over $500 million worldwide and appearing on many critics' "best of 2024" lists. That success raises its profile for awards season and expectations for Part 2 next November. |
| Bigg Boss (Telugu TV series) season 8 | 1141 | India has film industries for all its languages, so why not versions of Big Brother for them as well? |
| Bigg Boss (Tamil TV series) season 8 | 1132 | |
| Bigg Boss (Hindi TV series) season 18 | 949 | |
| 2024 UK Championship | 922 | York hosted this snooker tournament, won by current world #1 and 2019 world champion Judd Trump. |
| 2024 Jharkhand Legislative Assembly election | 870 | A few months after choosing their representatives for the central government, eight Indian states voted for their state assemblies. One of them was Jharkhand, where the most seats went to the Jharkhand Mukti Morcha, followed by the Bharatiya Janata Party that rules the country. |
| Donald Trump | 857 | He's returning to the White House. The next four years will certainly be eventful. |
| Brian Thompson (businessman) | 850 | The murdered CEO of a health company. His tenure saw profits soar alongside increasing denials of medical care, including refusing insurance payment for non-critical visits to hospital emergency rooms and outright using artificial intelligence to automate claim denials. This led many people not to offer condolences for Thompson's death, but rather to celebrate it. |
| 2024 United States presidential election | 826 | People are still trying to process all that happened, especially as the news covers Biden's lame-duck actions (which included a controversial pardon for his son Hunter Biden) and Trump's picks for his upcoming cabinet. |
| 2024 Irish general election | 823 | The Emerald Isle chose the members of the 34th Dáil, and Fianna Fáil remained the party with the most seats. |
| Gladiator II | 813 | One of the least requested sequels of all time (the star of the first film is apparently not happy with it existing). Yet Ridley Scott's return to Gladiator, while nowhere near as compelling as the Oscar-winning original, is very watchable, with impeccable production values, thrilling action scenes, and an amazing cast, highlighted by Denzel Washington as the devious Macrinus. Hence Gladiator II earned positive reviews and made over $400 million worldwide, providing some return on its massive budget of at least $250 million. |
| South Vietnam | 809 | A few IP editors are making a lot of edits to the page on the country that the Americans supported in the Vietnam War, which was taken over by North Vietnam in 1975 and absorbed into the current nation. |

A monthly overview of recent academic research about Wikipedia and other Wikimedia projects, also published as the Wikimedia Research Newsletter.

A paper in The Economic Journal, titled "Public Good Superstars: a Lab-in-the-Field Study of Wikipedia",[1] presents results from a nine-year (2011–2020) study of the motivations and contributions of English Wikipedia editors. From the abstract:

Over 9 consecutive years, we study the relationship between social preferences – reciprocity, altruism, and social image – and field cooperation. Wikipedia editors are quite prosocial on average, and superstars even more so. But while reciprocal and social image preferences strongly relate to contribution quantity among casual editors, only social image concerns continue to predict differences in contribution levels between superstars. In addition, we find that social image driven editors – both casual and superstars – contribute lower quality content on average. Evidence points to a perverse social incentive effect, as quantity is more readily observable than quality on Wikipedia.

The study operationalizes these concepts using data from several sources. The sample consists of 730 English Wikipedia editors who volunteered to participate in a 2011 online survey and experiment designed to gauge their reciprocity and altruism: Participants were classified as "free-rider", "weak reciprocator", "reciprocator" and "altruist" according to their decisions in a public goods game. A topline result here indicates, perhaps unsurprisingly, that among Wikipedians there are fewer free-riders and more altruists than usual:

[...] the overwhelming majority of our subjects behave either as full or weak reciprocators (38 and 47%, respectively). The proportion of free-riders (about 7% in our data) does appear lower than the proportion of 20-30% usually obtained with more standard subject pools, however. Similarly, more subjects behave as pure altruists in our data (about 9%).

Furthermore, the paper uses the concept of "superstar contributors", defined generally as "highly regarded community members with impressive contribution records", and operationalized in the case of Wikipedia as editors who have received a barnstar. Among these, the editors who chose to display at least one such award on their user page are classified as "social signalers." (More precisely, the authors try to control for the fact that editors who contribute more may be more likely to display a barnstar simply because they are more likely to have received one, e.g. by taking into account the size of the editor's user page and the total number of barnstars received.)

The authors had already used this data in some publications which we covered here back in 2013 ("What drives people to contribute to Wikipedia? Experiment suggests reciprocity and social image motivations"). In the new paper, they also look at these 730 editors' contributions over the period from 2011 to 2020, specifically

how likely editors are to delete (i.e., “revert”) the contributions of others without providing an explanation [...] Wikipedia contributors typically consider non justified reverts as highly uncooperative and harmful to the project.

Among other results, the authors

uncovered a surprising negative correlation between our measures of contribution quantity and quality at the editor level. Namely, the social signalers in our data, if they contribute significantly more content to Wikipedia, also contribute lower quality material on average. In practice, this means that, as vetted by their peers, social signalers contribute content that persists about 38% less revisions on average.

Two of several "interesting patterns" highlighted by the authors concern editors' age and education level (two of the demographic variables from the 2011 survey):

older editors appear more cooperative by two of our measures: (i) they tend to contribute significantly more content [...], and (ii) they are less likely to leave their reverts unexplained [...]

[...] editors’ level of education is strongly associated with the quality of their edits [...]. Out of an 8-points scale, each additional degree level yields an average increase of 6% in content persistence. This represents a sizeable number: all else equal, an editor moving from the lowest education level in our data (i.e., who did not complete high school), to the highest (i.e., earned a PhD), would thus see the persistence of their contributions increase by 48% on average.

From the article:[2]

"In this overview, we will discuss how to go about creating or editing an article on a mathematical subject. [...] We will also discuss biographies of mathematicians, articles on mathematical books, and the social dynamics of the Wikipedia editor community."

The authors (all experienced Wikipedia editors) aptly cover various misunderstandings and pitfalls that academic mathematicians might encounter when contributing to Wikipedia. (For example, the "Writing About Your Own Work" section advises that "Rather than advertising their own super-specialization, experts can make themselves useful by explaining the prerequisites to understanding it. What articles would a student read in order to understand the background and broader context of your research?"). Somewhat ironically, the paper's first paragraph illustrates one such tension between the conventions of academia and Wikipedia:

This essay incorporates with permission material from our pseudonymous colleague XOR'easter,[supp 1] who also contributed many suggestions during the writing process. By the extent of XOR’easter’s contributions, they would normally be credited as an author. However it was not possible in time to find a way to strictly preserve anonymity and assign legal copyright. All four contributors disagree with this exclusion. I regret its necessity — Ed.

The paper's title includes a rather cringe-y pun referring to the Principia Mathematica.

Other recent publications that could not be covered in time for this issue include the items listed below. Contributions, whether reviewing or summarizing newly published research, are always welcome.

From the abstract:[3]

"we introduce the SustainPedia dataset, which compiles data from over 40K Wikipedia articles, including each article's sustainable success label and more than 300 explanatory features such as edit history, user experience, and team composition. Using this dataset, we develop machine learning models to predict the sustainable success of Wikipedia articles. Our best-performing model achieves a high AU-ROC score of 0.88 on average. Our analysis reveals important insights. For example, we find that the longer an article takes to be recognized as high-quality, the more likely it is to maintain that status over time (i.e., be sustainable). Additionally, user experience emerged as the most critical predictor of sustainability."

From the abstract:[4]

"Using a large-scale examination of publicly available data, we assessed whether species across 6 taxonomic groups received more page views on Wikipedia when the species was named after a celebrity than when it was not. We conducted our analysis for 4 increasingly strict thresholds of how many average daily Wikipedia page views a celebrity had (1, 10, 100, or 1000 views). Overall, we found a high probability (0.96–0.98) that species named after celebrities had more page views than their closest relatives that were not named after celebrities, irrespective of the celebrity threshold."

From the abstract:[5]

"This qualitative discourse analysis of editors' debates around climate change on Wikipedia argues that their hesitancy to 'declare crisis' is not a conscious editorial choice as much as an outcome of a friction between the folk philosophy of science Wikipedia is built upon, editors' own sense of urgency, and their anticipations about audience uptake of their writing. This friction shapes a group style that fosters temporal ambiguity. Hence, the findings suggest that in the [English] Wikipedia entry on climate change, platform affordances and contestation of expertise foreclose a declaration of climate crisis."

Wikipedia has policies for a reason. We are trying to do something here, this is explicitly not just a place to hang out chatting and gossiping. A certain amount of decorum and respect is generally appropriate and this is a policy that has strong support from the community, even though enforcement is uneven at best. Policies like WP:CIVIL are intended to remind users that although nobody here is paid, this is basically a workplace. Maybe it's more like a Montessori school in that all work is self-directed and there is no deadline for completing it, but we still don't expect users to randomly attack one another or to post animated emojis in article space because they think it's funny.

Off-wiki criticism forums do not have these rules; that is their entire point. I'm mainly speaking of Wikipediocracy (WPO) here, as it is the only one of those forums I participate in. Some of the other forums truly are hate or attack sites, as opposed to being mostly focused on genuine criticism. So, a person might say something on WPO that they would never say here, because it would be outside policy to do so. This is not a crime, although in some extreme cases it could and should lead to on-wiki sanctions.

Some folks on these external sites like to come up with nicknames based on a user's on-wiki name. Obviously, this is not allowed here. There is also arguably little to no value in it, especially if endlessly repeated every time the user in question comes up. Sometimes they say things like "<username> is a total idiot who should have their head examined" which, even if true, is unlikely to be seen by the user in question as useful feedback. Part of this trend may be due to the fact that, by and large, the person so targeted is not present in the discussion, but, as has become very, very apparent, sometimes they might be lurking, reading the discussion without participating in it. In my opinion, it just isn't helpful, but it equally is not an excuse for the user so targeted to start doing things on Wikipedia that violate Wikipedia policies.

I would say that some of these folks need to grow up, but, in many cases, so do the targets of their comments. If you want to engage someone who is criticizing you, step up and do it in the place where they are doing so. If you don't want to do that, your remaining option is to let it go, not to start attacking them on-wiki.

Nobody can deny that there is material posted on WPO that, were it posted on Wikipedia, would violate the outing policy. Wikipedia's outing policy is substantially stricter than pretty much the entire rest of the internet. It is forbidden to speculate on the identity of other users in any way, including other online identities on other websites that may clearly be the same person, unless that person has disclosed that connection on Wikipedia itself. Whether one agrees with it or not, this is policy and should be adhered to.

WPO does not have any such rule. Most websites don't. It isn't generally considered a horribly invasive act to notice that User:Steve D edits content about the band Billy and the Boingers, and that some guy on Twitter or whatever named Steve Dallas is, in fact, the band's manager. Saying as much on a completely different website manifestly cannot be considered a violation of any Wikipedia policy. Although it might be preferable that, instead of posting it on a forum, the information was sent to paid-en-wp@wikipedia.org, we cannot obligate users of other websites to do so.

Note that this is not the same thing as doxing, which involves posting non-public personal information about someone without their permission.

The rest of this is about my specific situation; if you don't care about that, you can stop right here.

This is a bit more personal. In November 2023, the Arbitration Committee, of which I was a duly elected member at that time, informed me that they were considering removing me from office due to disclosures I had made on WPO. Plenty has been written about that elsewhere; look it up if you want to know more. The short version is that I did what they said I did: I disclosed certain material from ArbCom's mailing list publicly on WPO. In a surprisingly quick decision for the committee, I was not removed per se; the committee went with the odd decision to suspend me for six months, despite the fact that my term was ending in a month anyway, and I wasn't running for reelection. I could accept that, even if I didn't quite understand the reasoning behind a suspension when I was done anyway. What I did (and still do) have trouble accepting is that they also revoked my Oversight and Volunteer Response Team access when there was no hint of any sort of wrongdoing there.

Every arbitrator is granted these by default — along with CheckUser access — but I'd already had the Oversight permission for twelve years on my own merit, and there had never been any serious issues with my use of it, or with keeping material I saw in the course of using it confidential.

I don't think so.

Functionaries are appointed by the Committee, and they all know it is their responsibility to keep their mouths shut about what they see when using these powerful tools (which can certainly include personal data). It was, and is, important that such material be held in the strictest confidence.

Arbitrators are elected by the community to represent them at the highest level of dispute resolution. The community knew who I was, and what to expect, and I ran on a promise of trying to be more transparent when possible. I did what I did when I thought there was good reason to do it, even if it technically violated the level of privacy one normally expects from an email discussion. I wasn't there to toe the line and do what the other arbs wanted, I was there to do what I was elected to do — not once, but three times. There absolutely was not any personal information of any kind in any of the material I disclosed. It's an important distinction, and I would never release the kind of extremely sensitive material one routinely sees when using these tools.

What is important here is not that anyone agrees with my view — they only need to ask if they believe that I genuinely feel the way I say I feel about it.

I've apparently failed repeatedly at making that point to the Committee, possibly because I don't think I've ever put it quite like that. Maybe next year I'll try again. It is important work, and I did it for a very long time.

Wikipedia has passed its first audit required due to its designation as a Very Large Online Platform under the EU's new Digital Services Act (see prior Signpost coverage). The audit was conducted by an outside entity, named Holistic AI, for the Wikimedia Foundation, as reported by Holistic's press release. The Foundation has published the audit, its own "Audit Implementation Report" and related documents on its website.

The audit report found some non-material non-compliance concerning the provision of the Terms of Use in every official national language of the EU member states, affecting Bulgaria, Croatia, Czechia, Greece, Italy, Poland, Portugal, and Romania. It further requested that Wikimedia-controlled translations, rather than community translations, be made for all languages other than English, in order to avoid unintentional changes in meaning, and that emergency email and other regulation-relevant contacts be provided directly rather than through separately linked web pages. The auditors also noted:

Another recommendation is to establish a separate ToU for the EU, free from references to non-EU legislation or mechanisms, to better align with the access requirements under Article 14.

— Holistic AI 2024 DSA audit, Article 14 response, page 25

This recommendation that the Wikimedia Foundation provide EU-specific Terms of Use appears to have been rebuffed, judging by the Foundation's response, which refers to a single ToU: "The Wikimedia Foundation will review the ToU to make it less US-centric and to ensure contact information is easily accessible."

In its European Policy Monitoring Report for November 2024, Wikimedia Europe notes that besides this audit, Wikipedia's annual obligations under the DSA also include

A Systemic Risk Assessment and Mitigation (SRAM) Register. This is basically a living document where the WMF identifies risks and keeps track of mitigation measures.

Wikipedia, according to the documents, meets the obligations under the DSA, albeit improvement recommendations are made. The systemic risk register lists “disinformation” and “harassment” as immediate priorities with corresponding mitigation measures.

In other EU news, Wikimedia Europe reported that, on November 27, a new European Commission was officially approved by the European Parliament, and started its five-year term on December 1. As kindly highlighted by Wikimedia Europe itself and euronews, the Commission – once again led by Germany's Ursula von der Leyen – includes some faces who will likely become more and more familiar to tech experts and Wikipedia members in the next few years.

First up, it's Finland's Henna Virkkunen (EPP), who will serve as Executive Vice President and European Commissioner for Digital and Frontier Technologies. After serving two terms as an MEP and being elected for a third term last June, Virkkunen will be tasked with managing the Commission's digitalisation strategy, including matters such as the Copyright Directive and the implementation of the Digital Services Act (DSA) – which she had led the EU Parliament's work on – as well as the European Media Freedom Act (EMFA). Then, there's Bulgaria's Ekaterina Zaharieva (also from the EPP), who will be the European Commissioner for Startups, Research and Innovation, whose primary targets will include improving conditions for start-up and scale-up companies and setting up a new research council on AI.

In their analysis of Zaharieva and Virkkunen's November hearings, Communia highlighted a few key insights on copyright policy:

"From a copyright perspective, there was nothing surprising or unexpected in the hearings. While we understand the heavy emphasis on generative AI, we would like to see more work being done to promote the public interest.

The commitment to the idea of a 'fifth freedom' for knowledge and the support for a European Research Area Act are commendable. We fully support this proposal, but would encourage the incoming Commission to be even bolder and, in addition, propose a more comprehensive intervention – a Digital Knowledge Act – that benefits all kinds of knowledge institutions, including universities and research institutions, but also libraries, archives and cultural heritage institutions. If we want to unlock the full potential of European knowledge institutions, we need to address the barriers that currently prevent them from fulfilling their public service mission, including in the field of copyright."

The Wikimedia Europe "deep-dive" article also shed light on two more Commissioners relevant to the organization: Ireland's Michael McGrath (RE) and Malta's Glenn Micallef (PES). McGrath will serve as the European Commissioner for Democracy, Justice, and the Rule of Law: although his portfolio is quite wide, The Irish Times reported that he will be responsible for developing the Digital Fairness Act, which aims to tackle dark patterns and influencer marketing, and for improving co-operation between national data protection regulators. During his hearing, McGrath stated that he would "deepen the work to counter foreign information manipulation and interference and disinformation", among other tasks needed to "put citizens at the heart of our democracy".

Meanwhile, as the new Commissioner for Intergenerational Fairness, Youth, Culture, and Sport, Micallef will help address the issues of child protection, cyberbullying and "addictive design" that might trick users and consumers into increasing their engagement on digital platforms. By far the youngest member of the Commission, Micallef pointed out during his hearing that "social media's ability to amplify voices and movements has made them a powerful tool for youth engagement, but navigating them requires critical thinking."

In their own conclusions, Wikimedia Europe noted that the work and positioning of the new Commission on each of the aforementioned topics might be significantly affected by its thin supporting majority and the rise of new right-wing political groups in the Parliament, writing:

"For Wikimedia, the new political landscape comes with some unforeseeable risks, but could also open up new avenues.

For the time being, it seems that the EPP [group], and its chair, Manfred Weber, can play the pivotal role. Developments at the national and international level, and a different political stance of the S&D group, could overturn this situation. It would not be bold to say that the EU has challenging years ahead." – O

On December 5, Wiki Loves Earth publicly announced the 20 best pictures submitted by users around the world to the 2024 edition of its annual photographic contest, which historically aims to highlight the conservation areas of each participating country and to collect new images under free licenses.

According to the official data, a record 56 countries and territories took part in this year's competition, with more than 80,100 submissions from over 3,800 different uploaders. Germany registered by far the highest number of submissions, with 16,921 total uploads; Ukraine ended in second place with 6,438 uploads, while Senegal came in third (just) with 3,774 uploads.

After each country had chosen their local winners, the jury of WLE, formed by professional photographers, experts, and Wikimedians, gathered to select the 20 international winners of the contest, divided as usual into two categories: "Landscapes" (including individual trees that are considered natural monuments) and "Macro/close-up" (involving pictures of animals, plants and fungi). Two additional special categories, dedicated respectively to human rights-themed images and to video nominations, were also held.

You can discover the international winners of WLE 2024 here and here. Enjoy! – O

Since the latest Signpost issue covering them in October this year, there have been three Wikimedia Foundation bulletins: early November, late November, and early December.

The 2023-24 Fundraising report was published. Fundraising grew by 0.51% since 2022-23 (in comparison to a 2.7% growth from 2021-22 to 2022-23) to reach $170.5 million. The number of unique donations increased by 2.5 million to total 17.4 million. The WMF published a post about the 2024 Fundraising Campaign in English.

The WMF Board of Trustees met in August 2024, voting to dissolve its Talent & Culture Committee (BoT minutes can be read at the Foundation wiki). Tulu Wikisource and Moore Wikipedia went live.

An open call went out for Wikimania 2027 and 2028. Any communities interested in hosting should make an 'Expression of Interest' by 27 January 2025.

The Charts extension, planned to replace the abandoned Graphs extension, was enabled on Commons and three pilot Wikipedias. The Graphs extension had been disabled sitewide in April 2023 over security concerns. – S

As part of the Asian News International vs. Wikimedia Foundation saga, the Foundation published an update on 3 December 2024. WMF staffer Quiddity (WMF) clarified that the Foundation had delivered a summons to the three editors involved in the case without disclosing information about them to ANI. There have been two more hearings since then, and a Delhi High Court Justice now plans to read the sources cited for the allegedly defamatory statements in the Wikipedia article; see more in-depth coverage at "In the media". – S

Bar and Bench, an Indian source for news on the judicial system, reported that Justice Subramonium Prasad, who is hearing the Wikimedia Foundation's appeal of a possible injunction in the Asian News International case, has said that he will read the sources cited in support of the allegedly defamatory content, with a particular focus on articles published by The Caravan and The Ken. A similar article appeared in Medianama. If the cited sources support the text included in the Wikipedia article about ANI, Justice Prasad may decide not to impose an injunction on the WMF.

As per the Bar and Bench report, the Justice said that "the courts in case of 19 1(a) ... have said that injunction must be exception and not the rule. Keeping that in mind, I have to then look into the question of irreparable loss, prima facie case and balance of connivance."

"I will also read the articles ... to see whether the (edits) are borne out of the articles or not. Obviously, if they are not borne out of the articles, they cannot do it [publish the claims]. Therefore, I can, to that extent, even ask them to take down those offending statements," the Court said.

The Court added that if it finds that such inference, as made in the edits, can be drawn from the articles, then it may not pass a takedown order.

However, it also wondered whether it can go into such detail at the interim stage.

"This is an understanding of the editor of what the source means. If the understanding is so defamatory that it is relying on something which actually does not mean it at all, then the person can be restrained... again the question is even if it can be understood in that way, then would the court go deeper into that aspect to come to a conclusion as to whether in no circumstances can it be construed it as that at all."

Pertinently, The Caravan and The Ken are not party to ANI's defamation suit before the High Court.

The defamation suit was filed alleging that Wikipedia was allowing defamatory edits to its page on the online encyclopedia.

The Court also said it would later examine whether Wikipedia is only an intermediary or a publisher to whom different rules will apply.

–Bar and Bench

Justice Prasad may not even have too much reading to do. As of July 1, 2024, just before ANI filed their lawsuit, there were only two references to The Caravan in the whole article about the news agency:

And just one reference to The Ken:

The page contains eight more news stories cited from Alt News, BBC, The Diplomat, The Guardian, Le Monde, Newslaundry, Outlook magazine, and Politico.

See previous Signpost coverage about the ongoing case here and here. – S

In a recent article for Boing Boing, named "From keyboard to prison cell: The dangerous side of Wikipedia editing", Ellsworth Toohey reminds us of four Wikipedia editors who have been imprisoned and one editor who has been executed for editing Wikipedia.

See List of people imprisoned for editing Wikipedia and previous coverage in the Signpost. – S

The Smiths' former frontman Morrissey laments what he considers to be inaccuracies on the Wikipedia page about him. As reported by NME, Morrissey listed the purported inaccuracies about his alleged past affiliations with two different punk rock bands: The Nosebleeds – briefly active between 1976 and 1978 – and Slaughter & the Dogs – formed in 1975 and still going. Morrissey's "Madness" missive, posted on his own website on December 1, stated:

“Wikipedia confidently list me as an ex-member of Slaughter And The Dogs, and an ex-member of The Nosebleeds. I did not ever join The Nosebleeds and I have no connection whatsoever with Slaughter And The Dogs. Is there anyone at Wikipedia intelligent enough to set the record straight? Probably not.”

But somebody has been quick enough to edit out details about both bands from Morrissey’s article, with a few users engaging in reciprocal reverts. The page, which has been white-locked for a while now, no longer contains any reference to Slaughter and The Dogs; on the other hand, phrases about Morrissey’s ties to The Nosebleeds both in the introduction and the "Early life" section are now referenced by the NME article. The Wikipedia page about the Nosebleeds had also been modified to reflect Morrissey’s claims, before user Martey reverted the edits. The article for Slaughter & the Dogs has actually stayed untouched since October 21, and never included any major reference to Morrissey.

Morrissey is no stranger to controversy, but he might have a point, so Stereogum put together a lengthy investigation into his past relationships with both bands. According to their report, in John Robb’s 2006 oral history Punk Rock, Slaughter & the Dogs' guitarist Mick Rossi stated that Morrissey auditioned for the band right after their first singer, Wayne Barrett-McGrath, had departed. Morrissey recorded four demos in the process, but none of these recordings has ever surfaced, and he never joined the group on a permanent basis.

However, things get trickier when it comes to The Nosebleeds. The Italian edition of Rolling Stone noted that the only significant reference to that band left on Morrissey's Wikipedia page, which mentions that Morrissey had agreed to join them as lead vocalist in November 1977, is supported by a citation of David Bret's 2004 biography Morrissey: Scandal and Passion. Stereogum managed to find an even older source supporting this version: Johnny Rogan's 1992 biography Morrissey & Marr: The Severed Alliance, in which a mutual friend of the future Smiths frontman and his future bandmate Johnny Marr confirmed that Morrissey had briefly joined The Nosebleeds, while Rogan himself stated that Morrissey had even co-written several of the group's songs with guitarist Billy Duffy.

Duffy's website provides more evidence of Morrissey's involvement with the punk rock band: the two performed together at at least two live gigs in 1978, the second of which was reviewed by NME and later recreated in the 2017 biographical film England Is Mine. To his credit, Morrissey did acknowledge this performance in his 2013 memoir Autobiography, but insisted that it was a one-off and that he was "lumbered" with the line-up for that evening being billed as The Nosebleeds.

So, while Stereogum tried their best to fact-check Morrissey's claims, it’s safe to say that a trip through his past musical ventures is just as confusing as some of his more recent controversies. Still, as the magazine suggested, if anyone manages to put the man himself "in touch with Mr. Wikipedia", maybe we can finally "set the record straight". Let us just make everyone involved aware of the rules on paid editing and COI, and let reliable sources sort it all out before anyone edits the articles again. – O

Wikipedia is under fire as mounting calls demand a rewrite of its article on Santa Claus. Those behind the calls are urging the online encyclopedia to classify the article under its biography of living persons (BLP) policy, arguing that because Claus is a real, living individual, his article should fall under the protections designed to safeguard living individuals from malicious portrayals. A source close to Claus alleges that he is angry the article is not a BLP, and has said that descriptors used in the first sentence, such as "legendary" and "who is said to bring gifts", cast doubt on his existence.

A CheckUser investigation traced a series of good faith edits aimed at correcting these disparaging misrepresentations to the same IP user, associated with a workshop at the North Pole. The good faith edits were quickly reverted and the good faith editors were blocked and labeled as sockpuppets, prompting accusations from many people of administrative overreach and unfair treatment. One brave elf, who has chosen to remain anonymous, said "the article undermines him and is an attack page."

It should also be noted that allegations have surfaced regarding potential conflicts of interest in a recent request for comment related to the article. We've reviewed the discussion and found no evidence to support these claims. Critics have argued that these accusations serve only to deflect from the legitimate concerns raised about the article's tone and adherence to Wikipedia's policies.

The following discussion is closed. Please do not modify it. Subsequent comments should be made on the appropriate discussion page. No further edits should be made to this discussion.

| An editor has requested comments from other editors for this discussion. Within 24 hours, this page will be added to the following list: When discussion has ended, remove this tag and it will be removed from the list. If this page is on additional lists, they will be noted below. |

Should the article on Santa Claus fall under BLP? SClausWiki (talkHo ho ho) 10:15, 21 December 2024 (UTC)

We reached out to Claus' team and Wikipedia for comment, but did not receive a response.

I love Christmas carols, especially the old ones. Charles Dickens's story A Christmas Carol is not that old — first published in 1843 — but is written in the form of a "Christmas carol in prose", according to the title page. Its chapters are even called staves. In the first stave, a passing caroler sings a small snippet of an old carol to Scrooge. Do you know the Christmas carol sung in A Christmas Carol?

"God Rest Ye Merry, Gentlemen" goes back to the 1650s, but songs have been associated with mid-winter holidays for over 2,000 years. For example, the Roman holiday Saturnalia was associated with song, as well as wine and political incorrectness — though it should not be confused with Bacchanalia. There's even a modern Saturnalia song, sung in Latin, titled "Io, Saturnalia" (In English: "Yo, Saturnalia") which might be better to skip.

Carols are not necessarily religious, but they are almost always happy music you can dance to. "O Tannenbaum" means "Oh, fir tree" in German but is usually translated into English as "Oh, Christmas Tree". Other than the word "Christmas", the song has little to do with religion. It just praises the fir tree's "faithfulness": its ability to stay green all winter. In German, in French, and in English.

My favorite religious carols include:

"Good King Wenceslas" — celebrates the day after Christmas, the Feast of Stephen, and emphasizes the importance of charity (and gift-giving in general).

"It Came Upon the Midnight Clear" — a song that has lyrics from a poem of the same name, and is a very intellectual expression of the author's personal interpretation of the meaning of Christmas. It may mark his joy at the announcement of peace ending the Mexican–American War.

"O Holy Night" — sends a similar message.

Ramsey Lewis gives a jazz version of "We Three Kings".

To fully appreciate "O come all ye faithful", you need to hear it in a large, packed church with a powerful organ belting it out on Christmas Eve. The original Latin version, Adeste Fideles, can be even more powerful. Strangely, though I only know a few words of Latin, I always think of it as Venite Adoremus from the words in the chorus that translate to "Oh come let us adore (him)".

The explanation is the quirky, sprightly carol "The Snow Lay On the Ground", which also uses the words venite adoremus. The lyrics are attributed to a 19th-century Italian folk song, but three quarters of the time you just sing venite adoremus.

Another folk song, an African-American spiritual, "Go Tell It on the Mountain", is an expression of pure joy. It was first mentioned in 1901, and published in 1909.

There aren't many African-American folk songs that have become classic Christmas carols, but there is the ultimate "Christmas Song" sung and played by some of the best musicians on this page, including by the composer. [1]

And another great December song.

Modern Christmas carols and songs express many of the same themes as the earlier carols, adapted to the current state of the world. But I'm not going to link to "All I Want for Christmas is You" — you know where to find it, and you know that you have heard it enough already this year.

There are also many people who live in different circumstances in other countries, who celebrate different winter holidays, and worship in different faiths. Nobody should be left out at this time of year. We are sorry that there is not enough time to cover everybody's circumstances.

"Silver Bells" brings great memories of "Christmastime in the city". But I also have mixed feelings on its message. Is it meant to honor the Salvation Army? Or is it just an advertisement for the modern commercialized holiday that seems to start in October? Or maybe it is just a great song, in a bad movie, starring an even worse comedian?

There is no doubt that Elvis Presley's "Blue Christmas" is a great song. But sometimes I wonder if it has anything to do with Christmas.

José Feliciano's song [2] "Feliz Navidad" causes no such mixed feelings. A little bit of repetition never hurt a Christmas song.

Russia and Ukraine both have long traditions of celebrating Christmas and New Year's Day. And they share some of them.

В лесу родилась ёлочка ("In the woods is born a fir tree") is a Russian children's New Year's song. It mirrors "O Christmas Tree", but it features a cute little bunny and an angry wolf, and most children's videos of it include Father Frost (a Slavic Santa Claus).

The music to this little Christmas dance was written by a gay Russian composer whose grandfather was born in Ukraine.

Do not be fooled by a bit of chaos at the start of this video of Ukrainian carolers.

These shared traditions only make the current war more tragic.

There are other tragedies happening right now that involve different religions that share, in part, a common heritage.

You might think it would be difficult finding a Jewish Christmas carol, but a song often called "The best selling Christmas song of all time" was written by Irving Berlin, a Jew.

Hanukkah songs include "The Dreidel Song", "8 Days (Of Hanukkah)" by Sharon Jones & the Dap-Kings [3], and "Hanukkah Rocks" [4] by The LeeVees (the last two links are to NPR's Tiny Desk Concert).

You might think there are no Muslim Christmas songs, and perhaps you are right. But Muslims are allowed to borrow the Christmas carols they like and even compose their own, just like anybody else. This is the view put forward in these two thought-provoking videos.

We all share part of our common human heritage. We all share in our common human tragedy.

As anyone paying attention to The Signpost in 2018 would have noticed, the publication was struggling. So was the team. One of the struggles that has recently cropped up again is in how to deal with reporting that involves specific members of the Wikipedia community and the wider Wikimedia movement. For example, what type of Wikimedian-specific content, if any, should we cover? Are critical pieces of specific Foundation members acceptable? What about controversies surrounding members of the community, such as chapter board members or notable Wikimedians? Is the line drawn at trawling AN/I for juicy threads, or is that acceptable, too? At what point does investigative journalism become sensationalism, or community news become gossip?

Prior issues have contained content which criticized specific people, and which reported on conflicts and controversies between particular users; reader responses have been mixed, with some condemning it, others criticizing it, and still others commending the commentary. While the support is encouraging, the criticisms and complaints (some of which border on personal attacks and harassment, in a venue that some consider a safe haven from our Wikipedia policies) tell us where we may be falling short of the hopes and expectations of our readers.

At The Signpost, as in Wikipedia generally, the readers come first. We write for you, so your input is paramount in deciding the content of what we write; and if you write, we publish. Like the rest of Wikipedia, we also value consensus in determining what to publish—and not just the local consensus that may be achieved in the newsroom. That is why we are bringing this to you, the readers:

Please, tell us what you think in the reader comments below! We want to understand where the line is, and what you want to be reading, when it comes to reporting on controversies, conflicts, scandals, and other news involving specific members of the community. The better we understand your views, the better we can provide the content you will want to read, or, in the worst-case scenario, learn whether you wish to continue reading The Signpost at all, and whether the editorial team is fighting an uphill battle to keep it in print.

Finally, the editors and contributors to The Signpost would like to wish our readership and the Wikipedia community a very happy holiday season. Enjoy a well-deserved break, and we'll see you after the new year.

Dec 23, 21:38 UTC

Resolved - This incident has been resolved.

Dec 23, 20:44 UTC

Monitoring - A fix has been implemented and we are monitoring the results.

Dec 23, 20:08 UTC

Investigating - We are currently investigating this issue.

From the parting of a mist’s ethereal cloak to the narrowing borders of a drying lake, the two winners of this year’s Wiki Loves Earth photo contest remind us of Earth’s timeless beauty and its fragility.

For more than a decade, the volunteer-organized Wiki Loves Earth has been capturing the breathtaking essence of the planet’s natural heritage. Photos of all sorts of nature, from iconic national parks to hidden gems in local green spaces, are eligible. Wiki Loves Earth’s winners fell into two categories: a “macro/close-up” category (including animals, plants, fungi) and a “landscapes” category for wider shots.

This year’s winner of the macro category captures a majestic deer emerging from a mist-shrouded forest a bit east of Rome. One of Wiki Loves Earth 2024’s judges called the deer “a scene straight out of a mystery film,” while another said that the connection between the deer and photographer Michele Illuzzi felt “supernatural.”

The equivalent winner of the landscapes category went up high to portray the scaly land that reveals Lake Burdur’s evaporating footprint in western Turkey. A contest judge commended photographer Fatih Yılmaz’s artistry and “unusual dynamic composition” that found a balance between color and texture.

This year, Wiki Loves Earth received more than 80,000 submissions from over 3,800 participants in 56 countries, the highest number of countries ever in the contest’s history. From those, 583 were selected by local jury teams and forwarded to the international competition. You can learn more about Wiki Loves Earth and its full rules on its website. Check out the nineteen other winners below.

Photo by Maksat Bisengaziyev/Максат79, CC BY-SA 4.0

Second place (landscapes): The Ustyurt Nature Reserve in the far southwestern portion of Kazakhstan supports a wide variety of fauna across its varied landscapes, which range widely in elevation. It’s a bit larger than the country of Mauritius. Photographer Maksat Bisengaziyev framed this view of a few of the reserve’s many rock formations so that there would be a diagonal line running from the bottom left to the top right. One judge noted that this technique “created depth without compromising too much of the objects’ sharpness.”

Photo by İsmail Daşgeldi/Ismailtasgeldi, CC BY-SA 4.0

Third place (landscapes): The Wiki Loves Earth judges loved İsmail Daşgeldi’s composition and sense of scale in this photo. A viewer’s eye starts at the top of the enormous and looming mountains. Their true size is slowly revealed as the eye follows the winding road to Yaylalar, a small Turkish village of just 43 people, at the bottom.

Photo by Maksat Bisengaziyev/Максат79, CC BY-SA 4.0

Fourth place (landscapes): At least one blogger has called this range the ‘tiramisu mountains’, and anyone who has had the Italian dessert would think it’s easy to see why. This is another Maksat Bisengaziyev photo from Kazakhstan, with this one coming from the Kyzylsai Regional Nature Park. “There is something majestic about the composition,” one contest judge observed. “The low light brings out the structure in the surface really well without tinting the color on the stone too much.”

Photo by İsmail Daşgeldi/Ismailtasgeldi, CC BY-SA 4.0

Fifth place (landscapes): İsmail Daşgeldi’s sense of scale is on display again in this photo, which sets the Hürmetçi Marshes against the backdrop of Mount Erciyes on a mid-April morning. But what puts this image over the top is the lonely animal near the center, pausing for a moment to get a drink.

Photo by Missoni Francesco/Scosse, CC BY-SA 4.0

Sixth place (landscapes): Missoni Francesco’s photo of a glacial lake in extreme northeastern Italy brings chills, and not just for the temperature. Wiki Loves Earth’s judges loved the innovative use of the lake’s reflections and the mist swirling around the mountain peaks. “Such deep blues, and in so many shades,” they added.

Photo by Marat Nadjibaev, CC BY-SA 4.0

Seventh place (landscapes): The first thing you are likely to notice in this shot of Kyrgyzstan’s Madygen Formation is the colors, which result from lakes and rivers running their way to a nearby ocean millions of years ago. One contest judge thought that Marat Nadjibaev’s photo “truly makes one appreciate the Earth’s many unseen wonders,” while another opined that the cloudy day helped ensure that a blue sky did not detract from the rock’s colors.

Photo by Turan Reis/Turreis10700, CC BY-SA 4.0

Eighth place (landscapes): On this crisp November morning, Turan Reis got out of bed early to capture a moment in time at Karagöl-Sahara National Park in northeastern Turkey. They discovered morning mist drifting through vibrant autumn trees, with a late-year sun creating lengthy rays of light and deep shadows across a few rolling hills.

Photo by Skander Zarrad, CC BY-SA 4.0

Ninth place (landscapes): Like something out of a Bond film, a fish trap provides the frame for Skander Zarrad’s photo of a fisherman returning at daybreak to sell their catch. This scene was found in Tunisia’s Kerkennah Islands.

Photo by Ekrem Kalkan/Kecags, CC BY-SA 4.0

Tenth place (landscapes): Wiki Loves Earth’s judges commended the “fantastic perspective” of this top-down shot of Turkey’s Yedigöller National Park, which includes a wide variety of trees in an array of autumnal colors. They also loved how the narrow road’s meandering path draws viewers through the photo.

Photo by Lukáš Kött/Luckhy86, CC BY-SA 4.0

Second place (macro): It’s a tender moment between parent and child: this Eurasian hoopoe prepares to feed its hungry offspring with a recently captured bug. Lukáš Kött captured this visual poetry in South Moravia, in the southern Czech Republic near its border with Austria. “Not only full of action, but educational too,” said one Wiki Loves Earth judge. Another photo from Lukáš Kött took tenth place in the macro category.

Photo by Mehmet Karaca/Mkrc85, CC BY-SA 4.0

Third place (macro): Mehmet Karaca’s fascinating image of two conehead mantises in Turkey’s Kapıçam Nature Park lends itself to all manner of personification. One judge who reviewed the photo found themselves humming the theme from the classic American comedy The Pink Panther. What do you see?

Photo by Lubomír Dajč, CC BY-SA 4.0

Fourth place (macro): It may look like these yellow-winged darters are taking a break from work, but they’re not old enough to fly yet. These newly hatched animals are drying out on a twig in the Czech Republic’s Žďárské vrchy protected natural area. One judge called out Lubomír Dajč’s photo for its “wonderfully crisp contours” and added that it was “oozing complementary colors”.

Photo by Anissheikh2647, CC BY-SA 4.0

Fifth place (macro): User:Anissheikh2647 helped this bonded couple of pheasant-tailed jacanas while the birds went through the difficult process of raising a chick. “I love many aspects of this shot,” one judge said. “The color palette reinforces the composition, the sharpness of the details is just right and complements the bokeh effect, and the contrasting motion between the two birds is beautifully captured, adding levels to the image.”

Photo by Mark Kineth Casindac/Kramthenik27, CC BY-SA 4.0

Co-sixth place (macro): The first of two consecutive photos from Philippine photographer Mark Kineth Casindac, also known as User:Kramthenik27, sees these two Apodynerus flavospinosus or potter wasps hanging onto some sort of stalk in Northern Negros Natural Park. The Wiki Loves Earth judges loved the colors on display in this shot, as well as the sharpness Mark Kineth Casindac was able to obtain on the small creatures.

Photo by Mark Kineth Casindac/Kramthenik27, CC BY-SA 4.0

Co-sixth place (macro): Mark Kineth Casindac’s second shot found two leaf-cutting cuckoo bees sitting face to face in the same park. The photographer noted that these bees are endemic to the Philippines, and they can be commonly found in grassy areas. “The composition is simple and clean, but well-structured,” one contest judge noted.

Photo by Mehmet Karaca/Mkrc85, CC BY-SA 4.0

Seventh place (macro): Another photo from Mehmet Karaca, the third-place macro winner, shows that enjoying the morning sun is absolutely not limited to the human race. This baby chameleon, which is evidently no bigger than a flower, is getting a few rays in Turkey’s Kapıçam Nature Park.

Photo by Dasrath Shrestha Beejukchhen, CC BY-SA 4.0

Eighth place (macro): We do not know why this Nepalese spotted deer is in full sprint. But no matter why it got moving, photographer Dasrath Shrestha Beejukchhen ultimately benefited from the deer’s leap onto the path running along the right side. One judge noticed that the dots on the side of the deer were stretched, an indicator of the speed at which it was moving.

Photo by Asker Ibne Firoz, CC BY-SA 4.0

Ninth place (macro): This is not your standard nature photo. In this shot, Asker Ibne Firoz sharply captures a stationary lineated barbet chick in its nest right alongside its fast-moving mother. Wiki Loves Earth’s judges applauded the technical skill on display in this photo, with one adding that Firoz managed to take “an artistic approach” that nevertheless “retained its educational potential.” Firoz found this scene at the National Botanical Garden in Dhaka, Bangladesh.

Photo by Lukáš Kött/Luckhy86, CC BY-SA 4.0